Yes, the heading is correct: GitLab is moving from Microsoft Azure to Google Cloud Platform (GCP). I know what you might be thinking, but this decision wasn't a reaction to Microsoft's decision to buy GitHub; the migration was being planned before that. Most of this article is drawn from GitLab's original article: Here

They believe Kubernetes is the future. It's a technology that makes reliability at massive scale possible. This is why, earlier this year, they shipped native integration with Google Kubernetes Engine (GKE) to give GitLab users a simple way to use Kubernetes. Similarly, they've chosen GCP as their cloud provider because they want to run GitLab on Kubernetes. Google invented Kubernetes, and in their view GKE has the most robust and mature Kubernetes support. Migrating to GCP is the next step in their plan to make GitLab.com ready for users' mission-critical workloads. That, at least, is their reasoning.

I think that even if they had continued to run GitLab on a Kubernetes cluster on Azure, there might not have been any problem. Instead, they decided to side with the creator of Kubernetes. Right now GKE is pretty cheap if you compare the pricing of running similar workloads on both cloud platforms. Plus, GCP provides a quite stable Kubernetes service compared to others, and I don't think there is much doubt about that. Let's see what benefits they get from this migration.

Once the migration has taken place, they’ll continue to focus on bumping up the stability and scalability of GitLab.com, by moving their worker fleet across to Kubernetes using GKE. This move will leverage their Cloud Native charts, which with GitLab 11.0 are now in beta.

How they’re preparing for the migration

Geo

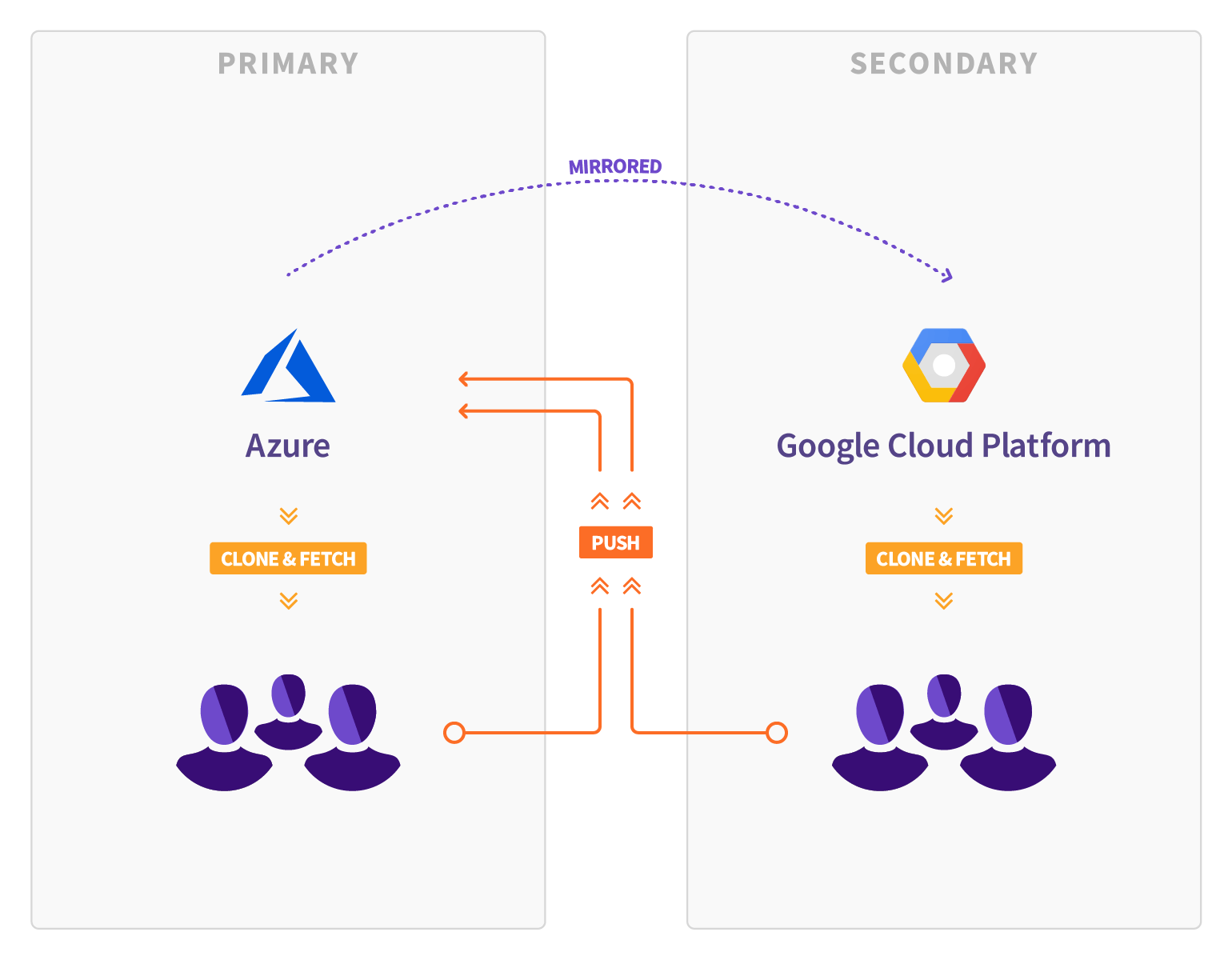

One GitLab feature they are utilizing for the GCP migration is their Geo product. Geo allows for full, read-only mirrors of GitLab instances. Besides browsing the GitLab UI, Geo instances can be used for cloning and fetching projects, allowing geographically distributed teams to collaborate more efficiently.

Not only does that allow for disaster recovery in case of an unplanned outage, Geo can also be used for a planned failover to migrate GitLab instances.

They are using Geo to move GitLab.com from Microsoft Azure to Google Cloud Platform. They believe Geo will perform well during the migration, and they intend this event to serve as another proof point for its value.

Read more about Disaster Recovery with Geo in their Documentation.

The Geo transfer

For the past few months, they have maintained a Geo secondary site of GitLab.com, called gprd.gitlab.com, running on Google Cloud Platform. This secondary keeps an up-to-date synchronized copy of about 200TB of Git data and 2TB of relational data in PostgreSQL. Originally they also replicated Git LFS, File Uploads and other files, but this has since been migrated to Google Cloud Storage object storage, in a parallel effort.

For logistical reasons, they selected GCP's us-east1 site in the US state of South Carolina. Their current Azure datacenter is in US East 2, located in Virginia. This is a round-trip distance of 800km, or 3 light-milliseconds. In reality, this translates into a 30ms ping time between the two sites.
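The "light-milliseconds" figure can be checked with a little arithmetic. The sketch below is only an illustration: the 800km round trip and the 30ms ping come from the text above, the speed of light in a vacuum is a physical constant, and the function and variable names are my own.

```python
# Speed of light in a vacuum, in kilometres per second (physical constant).
SPEED_OF_LIGHT_KM_PER_S = 299_792.458


def light_time_ms(distance_km: float) -> float:
    """Time in milliseconds for light in a vacuum to cover distance_km."""
    return distance_km / SPEED_OF_LIGHT_KM_PER_S * 1000.0


# Figures quoted in the article.
round_trip_km = 800.0
measured_ping_ms = 30.0

ideal_ms = light_time_ms(round_trip_km)
# How many times slower the real network is than the physical lower bound.
overhead_factor = measured_ping_ms / ideal_ms

print(f"Ideal round trip: {ideal_ms:.2f} ms")
print(f"Measured ping is about {overhead_factor:.0f}x the ideal")
```

The ideal figure comes out a little under 3ms, which matches the rounded value above. The measured ping is roughly an order of magnitude higher, which is expected: real traffic travels through fiber and routers rather than in a straight line through a vacuum.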

Because of the huge amount of data they need to synchronize between Azure and GCP, they were initially concerned about this additional latency and the risk it might have on their Geo transfer. However, after their initial testing, they realized that network latency and bandwidth were not bottlenecks in the transfer.

Object storage

In parallel to the Geo transfer, they are also migrating all file artifacts, including CI Artifacts, Traces (CI log files), file attachments, LFS objects and other file uploads to Google Cloud Storage (GCS), Google's managed object storage implementation. This has involved moving about 200TB of data off their Azure-based file servers into Google Cloud Storage.

Until recently, GitLab.com stored these files on NFS servers, with NFS volumes mounted onto each web and API worker in the fleet. NFS is a single point of failure and can be difficult to scale. Switching to GCS allows them to leverage its built-in redundancy and multi-region capabilities. The object storage effort is part of their longer-term strategy of lifting GitLab.com infrastructure off NFS. The Gitaly project, a Git RPC service for GitLab, is part of the same initiative. This effort to migrate GitLab.com off NFS is also a prerequisite for their plans to move GitLab.com over to Kubernetes.

How they're working to ensure a smooth failover

Once or twice a week, several teams, including Geo, Production, and Quality, join a video call to rehearse the failover in their staging environment.

Like the production event, the rehearsal takes place from Azure across to GCP. They timebox this event, and carefully monitor how long each phase takes, looking to cut time off wherever possible. The failover currently takes two hours, including quality assurance of the failover environment.

This involves four steps:

- A preflight checklist,

- The main failover procedure,

- The test plan to verify that everything is working, and

- The failback procedure, used to undo the changes so that the staging environment is ready for the next failover rehearsal.

Since these documents are stored as issue templates on GitLab, they can use them to create issues on each successive failover attempt.

As they run through each rehearsal, new bugs, edge-cases and issues are discovered. They track these issues in the GitLab Migration tracker. Any changes to the failover procedure are then made as merge requests into the issue templates.
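The idea of timeboxing a rehearsal and measuring each phase can be sketched in a few lines. This is a hypothetical illustration, not GitLab's tooling: the `Rehearsal` class, its methods, and the no-op phase actions are all assumptions of mine; only the four phase names and the two-hour figure come from the text above.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Rehearsal:
    """Hypothetical helper that times each phase of a failover rehearsal."""
    timebox_seconds: float
    clock: Callable[[], float] = time.monotonic  # injectable for testing
    timings: List[Tuple[str, float]] = field(default_factory=list)

    def run_phase(self, name: str, action: Callable[[], None]) -> None:
        """Run one phase and record how long it took."""
        start = self.clock()
        action()
        self.timings.append((name, self.clock() - start))

    def total(self) -> float:
        """Total time spent across all recorded phases."""
        return sum(duration for _, duration in self.timings)

    def within_timebox(self) -> bool:
        return self.total() <= self.timebox_seconds

    def slowest(self) -> str:
        """Name of the phase that took longest: the first place to cut time."""
        return max(self.timings, key=lambda item: item[1])[0]


# Two hours, the current failover duration quoted in the article.
rehearsal = Rehearsal(timebox_seconds=2 * 60 * 60)
for phase in ("preflight checklist", "main failover", "test plan", "failback"):
    rehearsal.run_phase(phase, lambda: None)  # placeholder for real work

print(f"Total: {rehearsal.total():.3f}s, "
      f"within timebox: {rehearsal.within_timebox()}")
```

Recording a duration per phase, rather than only a total, is what makes it possible to see where time can be cut between rehearsals.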

When will the migration take place?

GitLab will be making the move on Saturday, July 28, 2018.
